INFORMATION SYSTEMS. COMPUTER SCIENCES. ISSUES OF INFORMATION SECURITY

- The study sets out to justify the relevance and investigate approaches for solving the problem of managing the number of simultaneously functioning software robots of various types under conditions of limited computational resources and changes in sets of executable tasks.

- The developed method for generating statistical data based on the results of applying the sequential local optimization method is used to identify deficient and non-deficient computational resources.

- Some results of operating software robots developed on the Atom.RITA platform in the multifunctional center of RTU MIREA are outlined.

Objectives. The study sets out to justify the relevance and investigate approaches for solving the problem of managing the number of simultaneously functioning software robots of various types under conditions of limited computational resources and changes in sets of executable tasks.

Methods. The proposed solution is based on models and methods of scenario management, linear programming, inventory management, queuing theory, and machine learning. The described methods remain valid for different compositions of, and preconditions for generating, the initial data, and they take into account the relevance horizons of the obtained solutions.

Results. The initial data are obtained using the presented approach for determining the computational resource parameters required to operate a single software robot. The resources are determined by analyzing the composition of the software and information services used by an actual software robot. Problem statements and mathematical models are developed for cases involving scenario management and linear programming methods. Based on these solution sequences, methods are proposed for real-time management of the number of software robots and for their sequential local optimization. The developed method for generating statistical data based on the results of applying the sequential local optimization method is used to identify deficient and non-deficient computational resources. Some results of operating software robots developed on the Atom.RITA platform in the multifunctional center of RTU MIREA are outlined.
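
To make the linear-programming formulation more concrete, the sketch below chooses how many robots of each type to run so that a weighted throughput is maximized without exceeding CPU and RAM limits. It then labels each resource as deficient or non-deficient. All robot types, per-robot demands, capacities and weights are hypothetical. The integer problem is solved by exhaustive enumeration, which only suits toy sizes and stands in for a proper LP/ILP solver. Reading "deficient" as "no further robot fits within this resource" is our assumption, and this is not the authors' model or their sequential local optimization method.

```python
from itertools import product
from typing import Dict, Tuple

# Hypothetical per-robot resource demands: {robot_type: {resource: amount}}
DEMAND = {
    "invoice_bot": {"cpu_cores": 1.0, "ram_gb": 2.0},
    "mail_bot": {"cpu_cores": 0.5, "ram_gb": 1.0},
    "report_bot": {"cpu_cores": 2.0, "ram_gb": 1.5},
}
# Hypothetical capacities of the host that runs the robots
CAPACITY = {"cpu_cores": 8.0, "ram_gb": 10.0}
# Hypothetical value of one running robot of each type (e.g. tasks per hour)
WEIGHT = {"invoice_bot": 3.0, "mail_bot": 1.0, "report_bot": 4.0}


def usage(counts: Dict[str, int]) -> Dict[str, float]:
    """Total amount of each resource consumed by the given robot counts."""
    return {r: sum(counts[t] * DEMAND[t][r] for t in counts) for r in CAPACITY}


def best_allocation(max_per_type: int = 10) -> Tuple[Dict[str, int], float]:
    """Exhaustive search over integer robot counts (toy-sized problems only)."""
    types = list(DEMAND)
    best, best_value = {t: 0 for t in types}, 0.0
    for combo in product(range(max_per_type + 1), repeat=len(types)):
        counts = dict(zip(types, combo))
        used = usage(counts)
        if all(used[r] <= CAPACITY[r] + 1e-9 for r in CAPACITY):
            value = sum(WEIGHT[t] * counts[t] for t in types)
            if value > best_value:  # keep the first optimum found
                best, best_value = counts, value
    return best, best_value


def classify_resources(counts: Dict[str, int]) -> Dict[str, str]:
    """'deficient' if the leftover capacity cannot host even the least demanding robot."""
    used = usage(counts)
    labels = {}
    for r in CAPACITY:
        smallest = min(DEMAND[t][r] for t in DEMAND if DEMAND[t][r] > 0)
        leftover = CAPACITY[r] - used[r]
        labels[r] = "deficient" if leftover < smallest else "non-deficient"
    return labels
```

With these placeholder numbers the optimum uses every CPU core but leaves RAM to spare, so CPU is flagged as the deficient resource. Repeating the optimization over many task mixes and counting how often each resource is flagged is one simple way to build the kind of statistics the abstract refers to.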

Conclusions. The emerging problem of managing the number of simultaneously operating software robots of various types is formalized for cases involving scenario control methods and linear programming. This problem is relevant to the automation of organizational business processes. The use of mathematical methods for solving this problem opens up opportunities for expanding the functional capabilities of robotic process automation platforms and for increasing their economic efficiency, creating competitive advantages by optimizing the use of IT infrastructure components.

- The aim of this work is to develop approaches, tools and technology for detecting vulnerabilities in hardware at an early design stage, and to create a methodology for their detection and risk assessment, leading to recommendations for ensuring security at all stages of the computer systems development process.

- In order to detect vulnerabilities in hardware at an early design stage, a special semi-natural simulation stand was developed.

- A scanning algorithm using the Remote Bitbang protocol is proposed to enable data to be transferred between OpenOCD and a device connected to the debug port.

Objectives. The development of computer technology and information systems requires consideration of their security, of methods for detecting hardware vulnerabilities in digital device components, and of protection against unauthorized access. An important aspect of this problem is to study whether existing methods are able to identify hardware errors directly or to search for them on the corresponding models. The aim of this work is to develop approaches, tools and technology for detecting vulnerabilities in hardware at an early design stage, and to create a methodology for their detection and risk assessment, leading to recommendations for ensuring security at all stages of the computer systems development process.

Methods. Methods of semi-natural modeling, comparison and identification of hardware vulnerabilities, and stress testing to identify vulnerabilities were used.

Results. Methods are proposed for detecting and protecting against hardware vulnerabilities: a critical aspect in ensuring the security of computer systems. In order to detect vulnerabilities in hardware, methods of port scanning, analysis of communication protocols and device diagnostics are used. The possible locations of hardware vulnerabilities and their variations are identified. The attributes of hardware vulnerabilities and risks are also described. In order to detect vulnerabilities in hardware at an early design stage, a special semi-natural simulation stand was developed. A scanning algorithm using the Remote Bitbang protocol is proposed to enable data to be transferred between OpenOCD and a device connected to the debug port. Based on scanning control, a verification method was developed to compare a behavioral model with a standard. Recommendations for ensuring security at all stages of the computer systems development process are provided.
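
The Remote Bitbang link is a plain ASCII stream. OpenOCD's remote_bitbang driver sends one character per pin update and encodes TCK, TMS and TDI as the digit '0' + (tck << 2 | tms << 1 | tdi). It requests TDO with 'R', which the remote side answers with '0' or '1'. It drives TRST/SRST with 'r' to 'u', toggles an LED with 'B'/'b', and ends the session with 'Q'. The sketch below is a minimal device-side decoder for such a stream, of the kind a simulation stand might place behind a socket. The class name and the target model are hypothetical. The target is a bare shift register clocked on the rising TCK edge, which ignores the TAP state machine and the falling-edge timing of real TDO. This is not the authors' stand or scanning algorithm.

```python
class BitbangTarget:
    """Hypothetical device-side model driven by a Remote Bitbang command stream."""

    def __init__(self, chain_length: int = 1):
        self.tck = self.tms = self.tdi = 0
        self.trst = self.srst = 0
        self.led = False
        self.running = True
        # Simplification: a bare shift register between TDI and TDO (no TAP FSM).
        self.chain = [0] * chain_length

    def _write(self, tck: int, tms: int, tdi: int) -> None:
        if tck and not self.tck:  # rising TCK edge: shift TDI into the chain
            self.chain = [tdi] + self.chain[:-1]
        self.tck, self.tms, self.tdi = tck, tms, tdi

    def process(self, data: bytes) -> bytes:
        """Consume commands and return the bytes to send back to OpenOCD."""
        reply = bytearray()
        for ch in data.decode("ascii"):
            if "0" <= ch <= "7":  # write TCK, TMS, TDI
                v = ord(ch) - ord("0")
                self._write((v >> 2) & 1, (v >> 1) & 1, v & 1)
            elif ch == "R":  # read TDO (last stage of the chain)
                reply += b"1" if self.chain[-1] else b"0"
            elif "r" <= ch <= "u":  # reset lines: trst, srst
                v = ord(ch) - ord("r")
                self.trst, self.srst = (v >> 1) & 1, v & 1
            elif ch in "Bb":  # blink LED on / off
                self.led = ch == "B"
            elif ch == "Q":  # quit
                self.running = False
                break
            # Any other command (e.g. newer SWD extensions) is ignored in this sketch.
        return bytes(reply)
```

For example, with a two-stage chain the stream "1504R" clocks in a 1 and then a 0, so the read returns b"1": the first bit has reached TDO. Comparing such replies with those of a reference model over the same command stream is one simple way to check a behavioral model against a standard.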

Conclusions. This paper proposes new technical solutions for detecting vulnerabilities in hardware, based on methods such as FPGA system scanning, semi-natural modeling, virtual model verification, communication protocol analysis and device diagnostics. The use of the algorithms and methods thus developed will allow developers to take the necessary measures to eliminate hardware vulnerabilities and prevent possible harmful effects at all stages of the design process of computer devices and information systems.

MULTIPLE ROBOTS (ROBOTIC CENTERS) AND SYSTEMS. REMOTE SENSING AND NON-DESTRUCTIVE TESTING

- The issues of design and implementation of new-generation tethered high-altitude ship-based systems were considered.

- A rational type of aerodynamic design for unmanned aerial vehicles was determined based on existing tethered platforms.

- The optimal architecture of the tethered system was defined and justified. The paper presents the general configuration of the system and a solution for its placement onboard the ship, and describes its operation.

- The main initial parameters for designing high-altitude systems such as take-off weight, optimal lift altitude, maximum power required for operation, structure of the energy transfer system, as well as deployment and lift time to the design altitude were selected and calculated.

Objectives. Currently, UAVs are actively used in many military and civilian fields such as object surveillance, telecommunications, radar, photography, video recording, and mapping. The main disadvantage of autonomous UAVs is their limited operating time. Long-term operation of UAVs on ships can be ensured by tethered high-altitude systems in which the engines and equipment are powered from the onboard energy source through a thin cable tether. This paper aims to select and justify the general configuration of such a system, as well as to calculate its required performance characteristics.

Methods. The study used methods of systemic and functional analysis of tethered system parameters, as well as methods and models of the theory of relations and measurement.

Results. The issues of design and implementation of new-generation tethered high-altitude ship-based systems were considered. A rational type of aerodynamic design for unmanned aerial vehicles was determined based on existing tethered platforms. The optimal architecture of the tethered system was defined and justified. The paper presents the general configuration of the system and a solution for its placement onboard the ship, and describes its operation. The main initial parameters for designing high-altitude systems such as take-off weight, optimal lift altitude, maximum power required for operation, structure of the energy transfer system, as well as deployment and lift time to the design altitude were selected and calculated.
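
One of the calculations listed above, sizing the energy transfer through the tether, can be illustrated with a simple DC model. The onboard source at voltage V feeds the UAV through a two-conductor tether of length L, so the loop resistance is R = 2ρL/A and the delivered power satisfies P = (V - IR)·I. The sketch solves this for the current and reports the tether loss and the voltage at the UAV. The DC two-wire model, the copper conductor, the function names and all numbers are assumptions for illustration. The paper's actual energy transfer structure and parameters are not reproduced here.

```python
import math

RHO_COPPER = 1.72e-8  # approximate resistivity of annealed copper at 20 °C, ohm*m (assumed)


def tether_resistance(length_m: float, area_mm2: float, rho: float = RHO_COPPER) -> float:
    """Loop resistance of a two-conductor tether (outgoing and return wires)."""
    return 2.0 * rho * length_m / (area_mm2 * 1e-6)


def tether_operating_point(v_source: float, r_loop: float, p_load: float):
    """Solve P = (V - I*R) * I, taking the smaller root (normal operating point).

    Returns (current in A, tether loss in W, voltage at the UAV in V), or None
    if the tether cannot deliver p_load at this source voltage (P > V^2 / 4R).
    """
    if r_loop == 0:
        current = p_load / v_source
    else:
        disc = v_source ** 2 - 4.0 * r_loop * p_load
        if disc < 0:
            return None
        current = (v_source - math.sqrt(disc)) / (2.0 * r_loop)
    loss = current ** 2 * r_loop
    return current, loss, v_source - current * r_loop
```

With a 100 m tether of 0.5 mm² conductors (about 6.9 ohm loop resistance), a 2 kW load cannot be supplied at 48 V at all, while a few hundred volts makes it feasible. This is why raising the transmission voltage is a natural lever when trading conductor cross-section, and hence tether mass and take-off weight, against losses.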

Conclusions. The methodology for calculating the necessary characteristics described in the paper can be used for developing and evaluating tethered high-altitude systems. These systems are capable of performing a wide range of tasks, without requiring a separate storage and launch location, which is especially important in the ship environment. The system presented herein possesses significant advantages over well-known analogues.

- The aim of this work is to create an effective iterative algorithm for the tomographic reconstruction of objects with large volumes of initial data.

- A reconstruction algorithm was developed on the basis of the use of partial system matrices corresponding to the dichotomous division of the image field into partial annular reconstruction regions.

- 2D and 3D digital phantoms were used to show the features of the proposed reconstruction algorithm and its applicability to solving tomographic problems.

Objectives. The purpose of this work was to create an effective iterative algorithm for the tomographic reconstruction of objects with large volumes of initial data. Unlike the convolution back-projection algorithm widely used in commercial industrial and medical tomographic devices, algebraic iterative reconstruction methods use significant amounts of memory and typically involve long reconstruction times. At the same time, iterative methods make it possible to solve a wider range of diagnostic tasks where greater reconstruction accuracy is required, as well as cases where only a limited amount of data is available, as in sparse-view acquisition or acquisition over a limited angular range.

Methods. A feature of the algorithm is the use of a polar coordinate system in which the projection system matrices are invariant with respect to rotation of the object. This significantly reduces the amount of memory required to store the system matrices and enables graphics processors to be used for reconstruction. Unlike the simple polar coordinate system used previously, we used a coordinate system with a dichotomous division of the reconstruction field, which ensures invariance to rotations while also providing a fairly uniform distribution of spatial resolution over the reconstruction field.
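
The memory saving comes from a symmetry. If the field is split into rings and equal angular sectors, and projection angles are taken with the same angular step, then rotating the object by one step only shifts each pixel's sector index by one. Only the matrix of the first view therefore needs to be stored, and every other view reuses it on a cyclically shifted image. The sketch shows this for a single annular region with a uniform sector count and uses it inside a plain Kaczmarz (ART) sweep. A dichotomous layout would keep one such partial matrix per annulus, for example with more sectors in outer annuli, which is our reading. The matrix values are hypothetical stand-ins for ray-pixel intersection lengths, Python lists stand in for a sparse GPU library, and this is not the authors' code.

```python
from typing import List, Tuple

# One ray of view 0 as a sparse row: list of (ring, sector, weight) entries.
Row = List[Tuple[int, int, float]]


def project(a0: List[Row], image: List[List[float]], view: int) -> List[float]:
    """Projection for `view` using only the stored view-0 rows.

    Rotating the object by one angular step shifts every sector index by one,
    so view k reads image[r][(s + k) % n_sec] where view 0 reads image[r][s].
    """
    n_sec = len(image[0])
    return [sum(w * image[r][(s + view) % n_sec] for r, s, w in row) for row in a0]


def art(a0: List[Row], sinogram: List[List[float]], n_rings: int, n_sec: int,
        sweeps: int = 50, relax: float = 1.0) -> List[List[float]]:
    """Kaczmarz/ART reconstruction over all views, storing only the view-0 rows."""
    x = [[0.0] * n_sec for _ in range(n_rings)]
    for _ in range(sweeps):
        for view, measured in enumerate(sinogram):
            for row, b in zip(a0, measured):
                norm2 = sum(w * w for _, _, w in row)
                if norm2 == 0.0:
                    continue
                pred = sum(w * x[r][(s + view) % n_sec] for r, s, w in row)
                step = relax * (b - pred) / norm2  # project onto this ray's hyperplane
                for r, s, w in row:
                    x[r][(s + view) % n_sec] += step * w
    return x
```

A rotationally symmetric object produces identical projections at every view, which is a quick sanity check of the index shift. Storing the view-0 rows in CSR form is what lets a standard sparse-matrix library, on a CPU or a GPU, do the heavy lifting.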

Results. A reconstruction algorithm was developed on the basis of the use of partial system matrices corresponding to the dichotomous division of the image field into partial annular reconstruction regions. 2D and 3D digital phantoms were used to show the features of the proposed reconstruction algorithm and its applicability to solving tomographic problems.

Conclusions. The proposed algorithm allows algebraic image reconstruction to be implemented using standard libraries for working with sparse matrices based on desktop computers with graphics processors.

MODERN RADIO ENGINEERING AND TELECOMMUNICATION SYSTEMS

- The results of fundamental research on electrodynamic effects of vector-wave deformation of nonstationary fields of sub-nanosecond configuration are presented as a means of identifying and authenticating signal radio images.

- Neural network techniques for the cumulant formation of radiogenomes of signal radio images are proposed on the basis of pole-genetic and resonant functions.

- A radiogenome, representing the unique authenticator of a radio image, is shown to be formed on the basis of physically unclonable functions determined by the structure and set of radiophysical parameters of the image.

- Cumulant features of signal radio images identified on the basis of pole-genetic and physically unclonable resonant functions of small-sized objects are revealed.

Objectives. Radiophysical processes involving the electrodynamic formation of signal radio images diffusely scattered by the signature of small-sized objects or induced by the near field of radio devices are relevant for identifying radiogenomic (cumulant) features of objects in the microwave range in the development of neuroimaging ultra-short pulse (USP) signal radio vision systems, telemonitoring, and near-radio detection. The paper sets out to develop methods and algorithms for vector analysis of radio wave deformation of nonstationary fields forming a signal radio image based on radiophysical and topological characteristics of small-sized objects; to develop software and hardware for registration and neural network recognition of signal radio images, including methods for the synthesis and extraction of signal radiogenomes using digital twins of objects obtained through vector electrodynamic modeling; and to analyze signal radio images induced by elements of printed topology of electronic devices.

Methods. The study is based on statistical radiophysics methods, time-frequency approaches for wavelet transformation of USP radio images, numerical electrodynamic methods for creating digital twins of small-sized objects, as well as neural network authentication algorithms based on the cumulant theory of pole-genetic and resonant physically unclonable functions used in identifying signal radio images.
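
Cumulants are the statistical quantities behind the cumulant features mentioned above. As a minimal, generic illustration, the sketch estimates the second- to fourth-order cumulants of a sampled real signal from its central moments, κ2 = μ2, κ3 = μ3 and κ4 = μ4 - 3μ2², and also returns their scale-free ratios. It does not reproduce the authors' pole-genetic or resonant-function construction. Computing such vectors per wavelet sub-band and passing them to a neural network classifier is an assumption on our part.

```python
from typing import List, Sequence


def central_moment(x: Sequence[float], order: int) -> float:
    """Biased (population) central moment of the samples."""
    n = len(x)
    mean = sum(x) / n
    return sum((v - mean) ** order for v in x) / n


def cumulant_features(x: Sequence[float]) -> List[float]:
    """[k2, k3, k4] from central moments: k2 = m2, k3 = m3, k4 = m4 - 3*m2^2."""
    m2 = central_moment(x, 2)
    m3 = central_moment(x, 3)
    m4 = central_moment(x, 4)
    return [m2, m3, m4 - 3.0 * m2 ** 2]


def normalized_cumulants(x: Sequence[float]) -> List[float]:
    """Scale-invariant skewness k3/k2^1.5 and excess kurtosis k4/k2^2."""
    k2, k3, k4 = cumulant_features(x)
    return [k3 / k2 ** 1.5, k4 / k2 ** 2]
```

Cumulants of order three and above vanish for a Gaussian process. This is why they are often used to separate structured, object-specific signal components from Gaussian noise.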

Results. The results of fundamental research on electrodynamic effects of vector-wave deformation of nonstationary fields of sub-nanosecond configuration are presented as a means of identifying and authenticating signal radio images. Neural network techniques for the cumulant formation of radiogenomes of signal radio images are proposed on the basis of pole-genetic and resonant functions.

Conclusions. A radiogenome, representing the unique authenticator of a radio image, is shown to be formed on the basis of physically unclonable functions determined by the structure and set of radiophysical parameters of the image. Cumulant features of signal radio images identified on the basis of pole-genetic and physically unclonable resonant functions of small-sized objects are revealed.

- The application of optimal processing methods in bistatic radar enables a synthetic aperture to be formed using scattered signals of satellite navigation systems.

- In order to improve the accuracy of estimates, the signal-to-noise ratio needs to be increased by combining coherent accumulation (aperture synthesis) and incoherent accumulation (aggregating measurements from different spacecraft).

- The signal processing methods and receiver structure proposed in this work for onboard nanosatellite equipment allow aperture synthesis to be achieved with realizable hardware requirements.

Objectives. Radar remote sensing systems based on the reception of navigation satellite signals reflected from the surface make it possible to deploy a constellation of nanosatellites for radar surveying of the Earth’s surface. The aim of this work is to develop the principles of constructing onboard bistatic remote sensing systems on nanosatellites and to assess their energy potential and the possibilities for increasing it.

Methods. The optimal processing method in onboard bistatic radar systems is a development of known analytical methods of optimal processing in monostatic systems. The calculation of the energy potential is based on the experimental data obtained by other authors.

Results. The utilization of signals from navigation satellite systems for surface sensing is a promising and developing area. The USA and China have deployed satellite constellations to perform remote sensing using reflected signals of navigation satellites. An algorithm for optimal processing in such systems, which realizes the principle of aperture synthesis, was developed, and the energy potential of bistatic synthetic aperture radar was calculated. In order to achieve this processing, the proposed scheme uses a standard navigation receiver to form reference signals.

Conclusions. The application of optimal processing methods in bistatic radar enables a synthetic aperture to be formed using scattered signals of satellite navigation systems. In order to improve the accuracy of estimates, the signal-to-noise ratio needs to be increased by combining coherent accumulation (aperture synthesis) and incoherent accumulation (aggregating measurements from different spacecraft). The signal processing methods and receiver structure proposed in this work for onboard nanosatellite equipment allow aperture synthesis to be achieved with realizable hardware requirements.
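
The accumulation argument can be checked numerically. In this sketch, aperture synthesis is modeled as coherent accumulation: the known phase history of the echo is removed from each sample before summing. The signal then adds in amplitude while the noise adds in power, so the SNR grows roughly N-fold. Incoherent accumulation averages the powers of independent looks, for example from different spacecraft. The quadratic phase history, unit amplitudes, complex Gaussian noise and function names are assumptions, and the receiver is idealized. This is not the processing chain proposed in the paper.

```python
import cmath
import math
import random
from typing import List, Sequence


def coherent_sum(samples: Sequence[complex], phase_history: Sequence[float]) -> complex:
    """Coherent accumulation: de-rotate each sample by its known phase, then add."""
    return sum(s * cmath.exp(-1j * ph) for s, ph in zip(samples, phase_history))


def incoherent_average(looks: Sequence[complex]) -> float:
    """Incoherent accumulation: average the powers of independent looks."""
    return sum(abs(z) ** 2 for z in looks) / len(looks)


def simulated_snr_gain(n: int, trials: int = 4000, amp: float = 1.0,
                       sigma: float = 1.0, seed: int = 1) -> float:
    """Monte Carlo estimate of (output SNR) / (input SNR) for coherent accumulation."""
    rng = random.Random(seed)
    phases = [0.05 * k * k for k in range(n)]  # hypothetical quadratic phase history
    noise_std = sigma / math.sqrt(2.0)  # per I/Q component, total noise power sigma^2
    outputs: List[complex] = []
    for _ in range(trials):
        samples = [amp * cmath.exp(1j * ph)
                   + complex(rng.gauss(0.0, noise_std), rng.gauss(0.0, noise_std))
                   for ph in phases]
        outputs.append(coherent_sum(samples, phases))
    mean = sum(outputs) / trials
    noise_power = sum(abs(z - mean) ** 2 for z in outputs) / trials
    snr_out = abs(mean) ** 2 / noise_power
    snr_in = amp ** 2 / sigma ** 2
    return snr_out / snr_in
```

For n = 16 the estimated gain comes out close to 16. Averaging M independent looks with incoherent_average does not raise the SNR in the same way, but it reduces the spread of the resulting power estimate, roughly as 1/sqrt(M). This is why the two kinds of accumulation are combined.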

- Experimental dependencies of the bit error rate on the signal-to-noise ratio and on the intensity of harmonic interference were obtained by computer simulation for coherent reception of M-PSK signals in a channel with noise and harmonic interference. Simulations were performed without error-correcting codes, with Hamming codes (7,4) and (15,11), and with the (7,5) convolutional code and Viterbi decoding.

- It is shown that the correction codes effectively correct errors in the presence of noise and low-intensity harmonic interference. The correction is ineffective in the presence of high-intensity interference.

Objectives. Signals with multiple phase shift keying (M-PSK), which exhibit good spectral and energy characteristics, are successfully used in many information transmission systems. These include satellite communication systems, GPS, GLONASS, DVB/DVB-S2, and a set of IEEE 802.11 wireless communication standards. In radio communication channels, the useful signal is affected not only by noise but also by various kinds of interference, one of which is harmonic interference. High-intensity harmonic interference can practically disrupt the reception of M-PSK signals. One of the important requirements for data transmission quality is the error tolerance of the system. There are different ways of improving the quality of information transmission, one of which is the use of error-correcting coding. The aim of the paper is to assess the noise immunity of a coherent demodulator of M-PSK signals using Hamming codes (7,4) and (15,11), and the (7,5) convolutional code with Viterbi decoding, when receiving M-PSK signals under noise and harmonic interference in the communication channel.

Methods. The methods of statistical radio engineering, optimal signal reception theory and computer simulation modeling were used.

Results. Experimental dependencies of the bit error rate on the signal-to-noise ratio and on the intensity of harmonic interference were obtained by computer simulation for coherent reception of M-PSK signals in a channel with noise and harmonic interference. Simulations were performed without error-correcting codes, with Hamming codes (7,4) and (15,11), and with the (7,5) convolutional code and Viterbi decoding.
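
For readers reproducing such experiments, the sketch shows two of the building blocks in textbook form. The first is a Hamming (7,4) encoder with syndrome decoding, which corrects any single bit error per 7-bit block. The second is a coherent M-PSK mapper with a nearest-phase hard decision. Harmonic interference would be added to the received complex samples, as a term of the form A·exp(j(2πf·n + φ)), before demodulation. The bit layout (p1 p2 d1 p3 d2 d3 d4), the natural (non-Gray) symbol mapping and the function names are assumptions. The (15,11) code and the (7,5) convolutional code with Viterbi decoding are not shown.

```python
import cmath
import math
from typing import List


def hamming74_encode(d: List[int]) -> List[int]:
    """Encode 4 data bits as [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c: List[int]) -> List[int]:
    """Correct up to one flipped bit using the syndrome, then return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # parity over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # parity over positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # parity over positions 4, 5, 6, 7
    syndrome = s1 | (s2 << 1) | (s3 << 2)  # 1-based error position, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


def mpsk_modulate(symbols: List[int], m: int) -> List[complex]:
    """Unit-energy M-PSK points exp(j*2*pi*k/M) with natural mapping."""
    return [cmath.exp(2j * math.pi * k / m) for k in symbols]


def mpsk_demodulate(samples: List[complex], m: int) -> List[int]:
    """Coherent hard decision: index of the nearest constellation phase."""
    step = 2 * math.pi / m
    return [round(cmath.phase(z) / step) % m for z in samples]
```

A second error in the same block produces a wrong syndrome and the decoder "corrects" the wrong bit. This is consistent with the observation that coding stops helping once strong harmonic interference causes dense bursts of symbol errors.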

Conclusions. It is shown that the correction codes effectively correct errors in the presence of noise and low-intensity harmonic interference. The correction is ineffective in the presence of high-intensity interference. These results can provide important guidance for designing reliable and energy-efficient systems.

MICRO- AND NANOELECTRONICS. CONDENSED MATTER PHYSICS

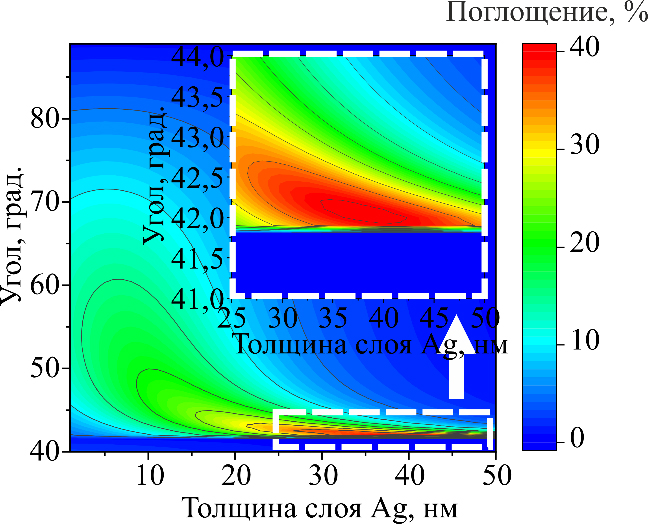

- Parameters at which the maximum absorption peak area was observed were identified, including the metallic layer thickness and the angle of light incidence.

- Based on numerical studies, it can be asserted that the optimal parameters for maximum absorption in the monolayer film are: Ag thickness <20 nm and angle of light incidence between 55° and 85°.

- The maximum absorption in the two-dimensional film was found to account for only a portion of the total absorption of the entire structure.

Objectives. The optical properties of two-dimensional semiconductor materials, specifically monolayer transition metal dichalcogenides, open up new horizons in nano- and optoelectronics. However, their practical application is hindered by low light absorption. When working with such thin structures, it is essential to consider numerous complex factors, such as resonance and plasmonic effects, which can influence absorption efficiency. The aim of this study is to optimize light absorption in a two-dimensional semiconductor in the Kretschmann configuration for future use in optoelectronic devices, taking these phenomena into account.

Methods. Numerical modeling was performed using the finite element method to solve Maxwell’s equations. A parametric analysis was conducted for three parameters: angle of light incidence, metallic layer thickness, and semiconductor layer thickness.
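
The study solves Maxwell's equations with the finite element method. For a planar stack in the Kretschmann configuration (prism, metal, 2D semiconductor, air), a quicker cross-check is the transfer-matrix method, which this sketch applies to p-polarized light. It returns the reflectance R(θ). Beyond the critical angle nothing is transmitted into the air, so 1 - R is the absorption of the whole stack. Splitting that absorption between the silver and the monolayer would require the field profile, which is not computed here. The permittivities and the monolayer thickness are rough illustrative placeholders, not measured data, and the layer order and function names are assumptions.

```python
import cmath
import math
from typing import List, Tuple

Layer = Tuple[complex, float]  # (relative permittivity, thickness in nm)

# Illustrative placeholder constants near 633 nm (not measured data).
EPS_PRISM = 1.515 ** 2         # BK7-like glass prism
EPS_AG = complex(-18.3, 0.5)   # silver, rough value
EPS_TMD = complex(18.0, 9.0)   # hypothetical monolayer TMD
TMD_NM = 0.65                  # hypothetical monolayer thickness


def stack(ag_nm: float) -> List[Layer]:
    """Prism side first: silver film, then the 2D semiconductor."""
    return [(EPS_AG, ag_nm), (EPS_TMD, TMD_NM)]


def reflectance_p(eps_prism: float, layers: List[Layer], eps_out: complex,
                  theta_deg: float, wavelength_nm: float) -> float:
    """p-polarized reflectance of prism | layers | exit medium (characteristic matrices)."""
    k0 = 2 * math.pi / wavelength_nm
    kx2 = eps_prism * math.sin(math.radians(theta_deg)) ** 2  # (n_prism sin(theta))^2

    def q(eps: complex) -> complex:
        # principal sqrt gives Im >= 0, i.e. a decaying wave in the exit medium
        return cmath.sqrt(eps - kx2) / eps

    m11, m12, m21, m22 = 1 + 0j, 0j, 0j, 1 + 0j
    for eps, d in layers:
        beta = k0 * d * cmath.sqrt(eps - kx2)  # phase thickness of the layer
        qj = q(eps)
        a11, a12 = cmath.cos(beta), -1j * cmath.sin(beta) / qj
        a21, a22 = -1j * qj * cmath.sin(beta), cmath.cos(beta)
        m11, m12, m21, m22 = (m11 * a11 + m12 * a21, m11 * a12 + m12 * a22,
                              m21 * a11 + m22 * a21, m21 * a12 + m22 * a22)
    q1, qn = q(complex(eps_prism)), q(complex(eps_out))
    num = (m11 + m12 * qn) * q1 - (m21 + m22 * qn)
    den = (m11 + m12 * qn) * q1 + (m21 + m22 * qn)
    return abs(num / den) ** 2
```

Sweeping theta_deg and the silver thickness in stack(...) and taking the maximum of 1 - reflectance_p(EPS_PRISM, stack(ag), 1.0, theta, 633.0) reproduces the shape of the parametric analysis for the whole stack. The per-layer split reported in the paper still needs the FEM (or a field-resolved TMM) solution.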

Results. Parameters at which the maximum absorption peak area was observed were identified, including the metallic layer thickness and the angle of light incidence. Based on the resulting graphs, optimal parameters were determined in order to achieve the highest absorption percentages in the two-dimensional semiconductor film.

Conclusions. Based on numerical studies, it can be asserted that the optimal parameters for maximum absorption in the monolayer film are: Ag thickness <20 nm and angle of light incidence between 55° and 85°. The maximum absorption in the two-dimensional film was found to account for only a portion of the total absorption of the entire structure. Thus, a customized approach to parameter selection is necessary in order to achieve maximum efficiency in specific optoelectronic applications.

MATHEMATICAL MODELING

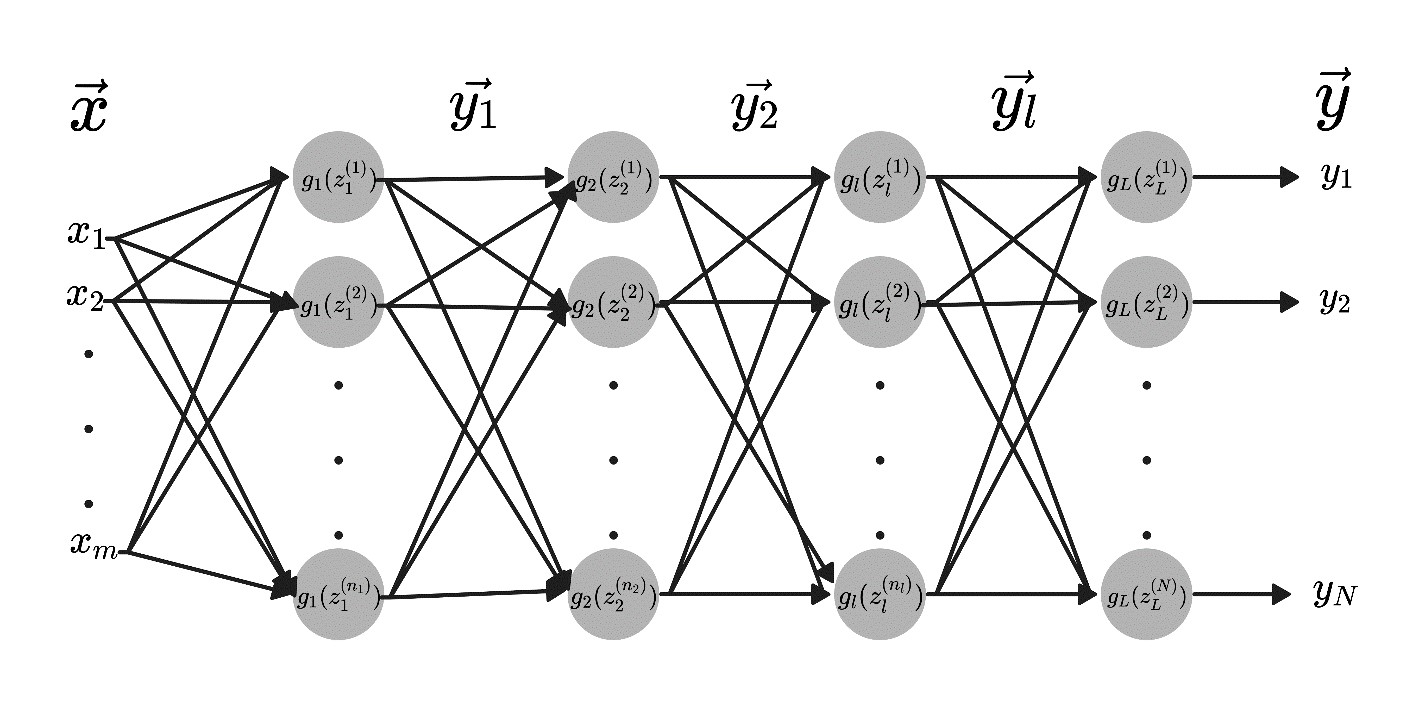

- The study aims to build neural network models of time series (LSTM, GRU, RNN) and to compare the forecasts they produce with those of standard models (ARIMA, ETS), in order to determine in which cases each group of models should be used.

- In the case of seasonal time series, models based on neural networks surpassed the standard ARIMA and ETS models in terms of forecast accuracy for the test period.

- Step-by-step forecasting is computationally less efficient than an integral forecast for the entire target period. However, it is not possible to say in advance which approach yields better forecast quality for a given series.

- Combined models (neural networks for trend, ARIMA for seasonality) almost always give good results.

Objectives. To build neural network models of time series (LSTM, GRU, RNN) and compare the forecasts they produce with those of standard models (ARIMA, ETS), in order to determine in which cases each group of models should be used.

Methods. The paper provides a review of neural network models and considers the structure of RNN, LSTM, and GRU models. These are used for modeling time series in Russian macroeconomic statistics. The quality of model fit to the data and the quality of forecasts are compared experimentally. Neural network and standard models can be applied either to the entire series or to its components (trend and seasonality). When building a forecast for several future time intervals, two approaches are considered: forecasting the entire interval at once, and step-by-step forecasting. This yields several combinations of models that can be used for forecasting, and these are analyzed in a computational experiment.
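
The two multi-step strategies differ in how often the model is invoked. In the step-by-step (recursive) strategy, a one-step model is called once per future interval and its own predictions are fed back as inputs. In the integral (direct) strategy, a model emits the whole horizon in one call. The sketch makes the difference explicit with a call counter. The toy linear-extrapolation models and all names are hypothetical placeholders for fitted LSTM, GRU, RNN, ARIMA or ETS models.

```python
from typing import Callable, List, Sequence


class CountingModel:
    """Wraps a forecasting function and counts how many times it is evaluated."""

    def __init__(self, fn: Callable):
        self.fn = fn
        self.calls = 0

    def __call__(self, *args):
        self.calls += 1
        return self.fn(*args)


def recursive_forecast(one_step: Callable[[Sequence[float]], float],
                       history: Sequence[float], horizon: int) -> List[float]:
    """Step-by-step: predict one value, append it to the inputs, repeat."""
    window = list(history)
    out = []
    for _ in range(horizon):
        y = one_step(window)
        out.append(y)
        window.append(y)  # the prediction becomes an input for the next step
    return out


def direct_forecast(multi_step: Callable[[Sequence[float], int], List[float]],
                    history: Sequence[float], horizon: int) -> List[float]:
    """Integral: a single model call returns the whole target period."""
    return list(multi_step(history, horizon))


# Hypothetical toy models: linear extrapolation from the last two points.
def linear_one_step(w: Sequence[float]) -> float:
    return 2 * w[-1] - w[-2]


def linear_multi_step(w: Sequence[float], h: int) -> List[float]:
    slope = w[-1] - w[-2]
    return [w[-1] + k * slope for k in range(1, h + 1)]
```

For a horizon of h intervals the recursive strategy costs h model evaluations against one for the direct strategy. It also lets early errors propagate into later inputs, which is one reason neither strategy wins uniformly on accuracy.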

Results. Several experiments have been conducted in which standard (ARIMA, ETS, LOESS) and neural network models (LSTM, GRU, RNN) are built and compared in terms of proximity of the forecast to the series data in the test period.

Conclusions. In the case of seasonal time series, models based on neural networks surpassed the standard ARIMA and ETS models in terms of forecast accuracy for the test period. Step-by-step forecasting is computationally less efficient than an integral forecast for the entire target period. However, it is not possible to say in advance which approach yields better forecast quality for a given series. Combined models (neural networks for trend, ARIMA for seasonality) almost always give good results. When forecasting a non-seasonal heteroskedastic share price series, the standard approaches (the LOESS method and the ETS model) showed the best results.
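
The combined models split the series into a trend part and a seasonal part, each handled by a different model, and add the two forecasts. The sketch shows only that plumbing. A least-squares straight line stands in for the neural network trend model, and per-season averaging of the detrended residuals stands in for ARIMA. Both substitutions and the function names are ours, and the series must cover at least one full period.

```python
from typing import Callable, List, Sequence


def linear_trend(y: Sequence[float]) -> Callable[[int], float]:
    """Least-squares line t -> a + b*t (stand-in for a neural trend model)."""
    n = len(y)
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    den = sum((t - t_mean) ** 2 for t in range(n))
    b = num / den
    a = y_mean - b * t_mean
    return lambda t: a + b * t


def seasonal_profile(resid: Sequence[float], period: int) -> List[float]:
    """Mean residual per position within the season (stand-in for a seasonal model)."""
    sums, counts = [0.0] * period, [0] * period
    for t, v in enumerate(resid):
        sums[t % period] += v
        counts[t % period] += 1
    return [s / c for s, c in zip(sums, counts)]


def combined_forecast(y: Sequence[float], period: int, horizon: int) -> List[float]:
    """Forecast = extrapolated trend + repeated seasonal profile."""
    trend = linear_trend(y)
    resid = [v - trend(t) for t, v in enumerate(y)]
    season = seasonal_profile(resid, period)
    n = len(y)
    return [trend(t) + season[t % period] for t in range(n, n + horizon)]
```

Replacing linear_trend with a fitted neural network and seasonal_profile with a seasonal ARIMA forecast keeps the same structure. Only the two component models change.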

ISSN 2500-316X (Online)