INFORMATION SYSTEMS. COMPUTER SCIENCES. ISSUES OF INFORMATION SECURITY

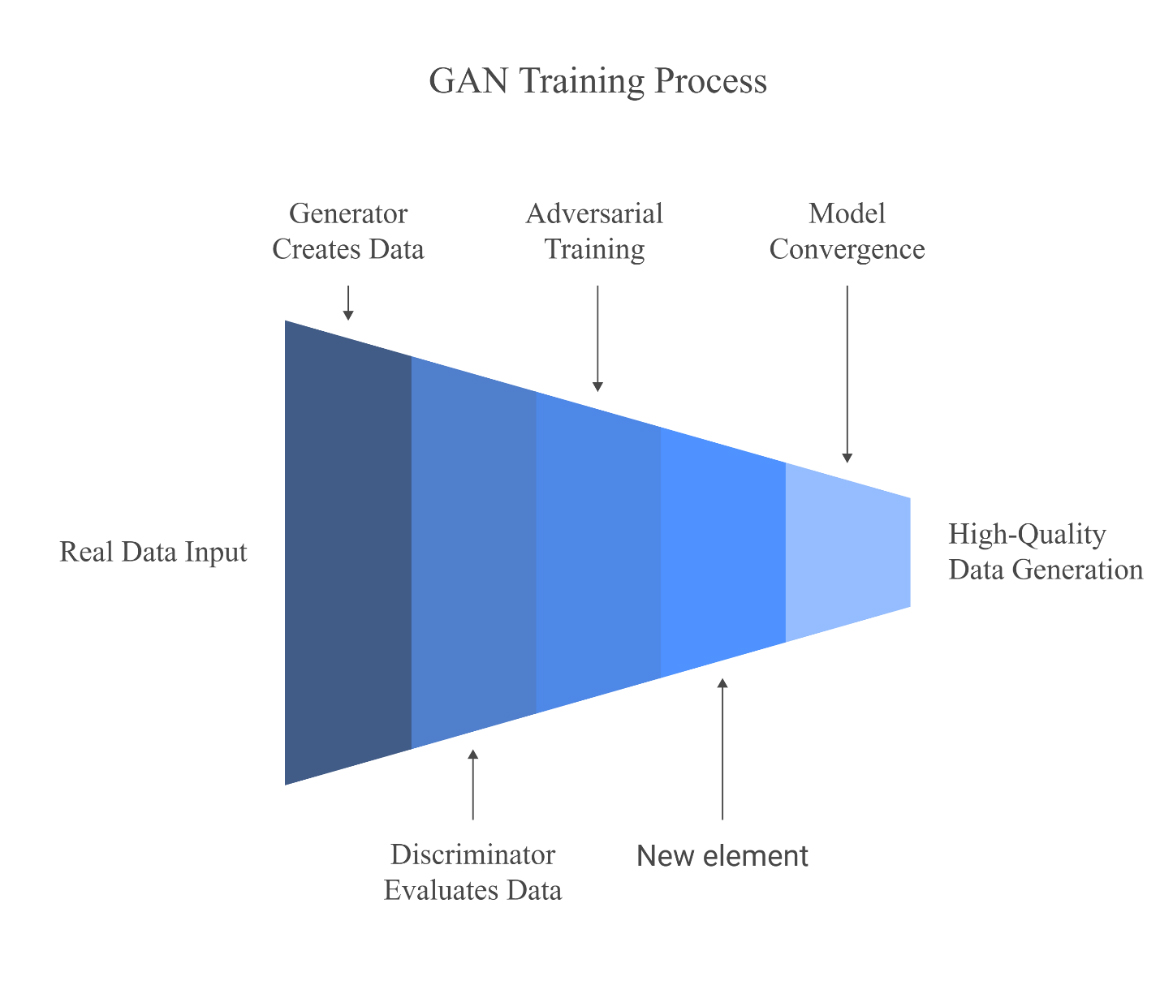

- The use of Generative Adversarial Networks (GANs) to revolutionize cybersecurity and anomaly detection processes was evaluated.

- The research focuses on the capabilities of GANs to produce synthetic data, simulate adversarial attacks, and identify outliers, as well as on resolving training instability and ethical issues.

- GANs enhance security measures by producing synthetic datasets, resulting in a 25% improvement in the accuracy of detection systems.

- Given ethical rules regulating their proper use, GANs advance cybersecurity by providing anomaly detection simultaneously with improved training stability and lower operating expenses.

Objectives. This review article sets out to evaluate the use of Generative Adversarial Networks (GANs) to revolutionize cybersecurity and anomaly detection processes. The research focuses in particular on the capabilities of GANs to produce synthetic data, simulate adversarial attacks, and identify outliers, as well as on resolving training instability and ethical issues.

Methods. A systematic review of relevant peer-reviewed articles spanning 2014 through 2024 was undertaken.

Results. The discussion concentrated on two main areas of GAN application: (1) cybersecurity through intrusion detection and adversarial testing; (2) anomaly detection for medical diagnostics and surveillance purposes. The research studied two essential GAN variants named Wasserstein GANs and Conditional GANs for their performance in addressing technical challenges. The assessment of synthetic data quality used the Fréchet Inception Distance and Structural Similarity Index Measure as evaluation metrics.
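As an illustration of one of the metrics named above, the following is a minimal sketch of the Structural Similarity Index Measure computed from global image statistics. It assumes grayscale images flattened into equal-length lists of intensities; the function name `global_ssim` is hypothetical, and practical implementations average the index over local windows rather than using a single global window.

```python
def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM for two equal-length sequences of pixel intensities.

    Simplification: statistics are taken over the whole image instead of
    over sliding local windows, so this is only a coarse approximation.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(x)
    c1 = (k1 * data_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical 2x3 "real" and "synthetic" patches, flattened row by row.
real_patch = [10, 50, 90, 130, 170, 210]
synthetic_patch = [12, 47, 95, 128, 160, 215]
print(global_ssim(real_patch, real_patch))
print(global_ssim(real_patch, synthetic_patch))
```

An identical pair scores 1, and the score falls as the synthetic sample departs from the reference in mean, variance, or structure.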

Conclusions. GANs enhance security measures by producing synthetic datasets, resulting in a 25% improvement in the accuracy of detection systems. The technique allows strong adversarial assessment to reveal system weaknesses while helping detect irregularities in data-poor areas for medical diagnostics. High-dimensional tasks demonstrate 40% training instability and lead to 30% output diversity loss. The need for regulatory frameworks becomes essential due to ethical issues, which include the use of deepfakes that result in 25% success rates of biometric system evasion. Given ethical rules regulating their proper use, GANs advance cybersecurity by providing anomaly detection simultaneously with improved training stability and lower operating expenses. Prior versions of GAN-reinforcement learning and additional transparent systems require focused development as part of responsible innovation efforts.

- A scalable method for detecting multi-vector attacks on Internet of Things (IoT) devices was developed.

- The developed hybrid neural network architecture combines convolutional networks for spatial dependence analysis and long short-term memory networks or gated recurrent units representing types of recurrent neural networks for analyzing time dependencies in network traffic.

- The developed model is superior to analogues in terms of accuracy, processing time, and memory usage.

- Hybrid architecture, pruning, and decentralized verification provide effectiveness against multi-vector IoT threats.

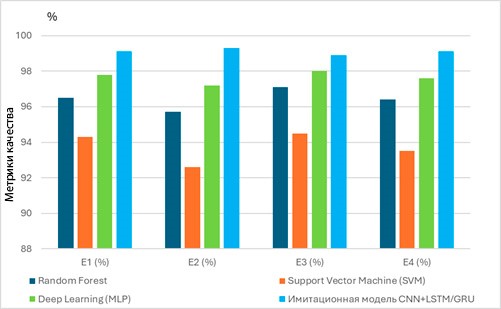

Objectives. The study sets out to develop a scalable method for detecting multi-vector attacks on Internet of Things (IoT) devices. Given the growth of security threats in IoT networks, such a solution must provide high accuracy in detecting attacks with minimal computing costs while taking into account the resource constraints of IoT devices.

Methods. The developed hybrid neural network architecture combines convolutional networks for spatial dependence analysis and long short-term memory networks or gated recurrent units representing types of recurrent neural networks for analyzing time dependencies in network traffic. Model parameters and computational costs are reduced by pruning. A blockchain with a proof of voting consensus mechanism provides secure data management and decentralized verification.
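The pruning step can be illustrated with a minimal sketch. The abstract does not state which pruning criterion was used, so magnitude-based pruning is assumed here; the function name `magnitude_prune` and the example weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of a weight matrix.

    Assumption: unstructured magnitude pruning. Weights tied with the
    threshold are also zeroed, so slightly more than the requested
    fraction may be removed.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    magnitudes = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(magnitudes) * sparsity)
    if n_prune == 0:
        return [row[:] for row in weights]
    threshold = magnitudes[n_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


# Hypothetical 2x3 layer: half of the weights are removed.
layer = [[0.5, -0.01, 0.2], [0.03, -0.9, 0.002]]
print(magnitude_prune(layer, sparsity=0.5))
```

Zeroed weights can then be stored in a sparse format or skipped at inference time, which is where the savings in memory and computation would come from.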

Results. Experiments on the CIC IoT Dataset 2023 showed the effectiveness of the model: the accuracy and F1 measure were both 99.1%. This confirms the ability to detect known and new attacks in real time with high accuracy and recall. Processing time is reduced to 12 ms, while memory usage is reduced to 180 MB, which makes the model suitable for devices with limited resources.
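For reference, the accuracy and F1 measure reported above follow from the confusion-matrix counts of a binary detector. The sketch below shows the standard definitions; the counts are illustrative only and are not taken from the CIC IoT Dataset 2023 experiments.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("at least one sample is required")
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative counts: 90 attacks caught, 10 missed, 10 false alarms.
print(classification_metrics(tp=90, fp=10, fn=10, tn=890))
```

Because attack traffic is often a minority class, F1 can differ noticeably from accuracy, which is why both are usually reported together.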

Conclusions. The developed model is superior to analogues in terms of accuracy, processing time, and memory usage. Hybrid architecture, pruning, and decentralized verification provide effectiveness against multi-vector IoT threats.

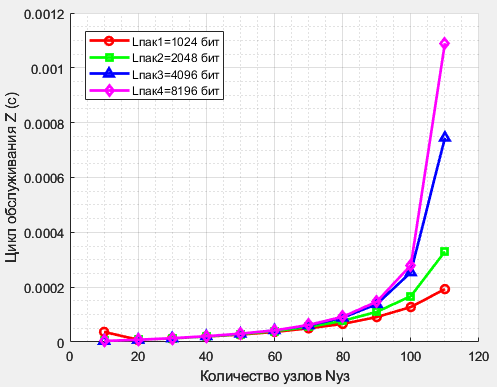

- A mathematical model for information transmission in multimode fiber-optic ring networks using a token-based access method to ensure efficient interaction between Internet of Things (IoT) devices was developed.

- The results confirm that multimode fiber-optic media provide an efficient foundation for IoT infrastructure that offers both high throughput and fault tolerance.

- By incorporating reliability characteristics into the model, it was possible to account for the impact of fiber-optic medium and network node failures on performance.

- Optimizing the parameters of the token-based access method, including time intervals and token transmission policies, significantly improves overall network performance by reducing collision probability and increasing throughput.

Objectives. The study sets out to develop and analyze a mathematical model for information transmission in multimode fiber-optic ring networks using a token-based access method to ensure efficient interaction between Internet of Things (IoT) devices. The work aims to evaluate the probabilistic and time-related characteristics, as well as the reliability and performance of the network infrastructure to optimize data transmission parameters, taking into account the specifics of IoT and the peculiarities of the fiber-optic medium.

Methods. Reliability theory methods are used to assess the network’s resilience to failures and increase its operational efficiency, along with techniques from the theory of stochastic processes to model the dynamics of data transmission under varying loads and approaches from queueing theory to analyze traffic distribution and packet queue management. The Laplace–Stieltjes transform is applied to derive functional equations that describe the probabilistic and time-related data transmission characteristics, enabling precise mathematical modeling of network processes.
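As background for the transform mentioned above, the standard definition and two properties that make it convenient in queueing models can be written as follows. The symbols $X$, $B(x)$, and $\beta(s)$ are notation assumed here for illustration and are not taken from the article.

```latex
% Laplace–Stieltjes transform of a nonnegative random time X
% with distribution function B(x) = P(X \le x):
\beta(s) = \int_{0}^{\infty} e^{-sx}\, dB(x) = \mathbb{E}\!\left[e^{-sX}\right],
\qquad \operatorname{Re} s \ge 0.

% Moments follow from derivatives at s = 0:
\mathbb{E}\!\left[X^{k}\right] = (-1)^{k} \left.\frac{d^{k}\beta(s)}{ds^{k}}\right|_{s=0}.

% For independent X_1 and X_2 with transforms \beta_1(s) and \beta_2(s),
% the transform of the sum X_1 + X_2 is the product:
\beta_{X_1 + X_2}(s) = \beta_1(s)\,\beta_2(s).
```

The product rule is what allows a total delay made up of independent stages, such as waiting for the token and then transmitting a packet, to be described by a functional equation in the transform domain.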

Results. The information transmission processes occurring in multimode fiber-optic networks with token access in the context of IoT systems were studied. The temporal characteristics of packet transmission for different classes, including critical IoT device data, were analyzed.

Conclusions. The results confirm that multimode fiber-optic media provide an efficient foundation for IoT infrastructure that offers both high throughput and fault tolerance. By incorporating reliability characteristics into the model, it was possible to account for the impact of fiber-optic medium and network node failures on performance. Optimizing the parameters of the token-based access method, including time intervals and token transmission policies, significantly improves overall network performance by reducing collision probability and increasing throughput. The developed mathematical model provides an effective tool for analyzing and designing local networks based on multimode fiber-optic technologies. This fact is especially important for networks serving critical infrastructure.

MODERN RADIO ENGINEERING AND TELECOMMUNICATION SYSTEMS

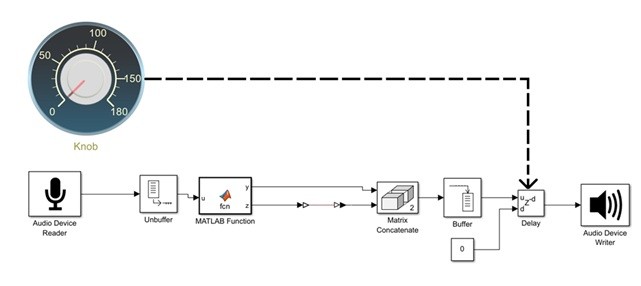

- The study sets out to parametrically investigate the impact of time delays within cyber-physical emulation circuits for signal audio modules.

- It examines how delays introduced by analog-to-digital and digital-to-analog converters of the hardware/software interface, the central processor, and the visual graphical emulation software environment are influenced by factors like the selected data input-output protocol and the block preset configurations: sampling rate, buffer size and time, and the number of channels.

- A novel approach to emulate analog audio devices using cyber-physical SPICE modeling was introduced. Through the use of digital twins, the study investigates the impact of modifiable parameters on signal delays within audio module circuits during cyber-physical emulation.

- Based on these findings, technical guidelines are provided for selecting appropriate delay correction settings between 20 and 120 ms to ensure efficient high-speed audio signal post-processing.

Objectives. The study sets out to parametrically investigate the impact of time delays within cyber-physical emulation circuits for signal audio modules. Specifically, it examines how delays introduced by analog-to-digital and digital-to-analog converters of the hardware/software interface, the central processor, and the visual graphical emulation (VGE) software environment are influenced by factors like the selected data input-output protocol and the VGE block preset configurations such as sampling rate, buffer size and time, and the number of channels.

Methods. The methods used include architectural SPICE modeling of electrical circuits on the VGE software platforms Simulink, which leverages the resources of the Simscape library, and LiveSPICE. Additional methods include those for incorporating differential equations in the numerical analysis of SPICE models designed for analog circuits and techniques for processing experimental data generated from cyber-physical emulation using the built-in Simulink environment and associated laboratory radio measurement tools.

Results. The study introduces a novel approach to emulate analog audio devices using cyber-physical SPICE modeling. Through the use of digital twins, the study investigates the impact of modifiable parameters on signal delays within audio module circuits during cyber-physical emulation. Based on these findings, technical guidelines are provided for selecting appropriate delay correction settings between 20 and 120 ms to ensure efficient high-speed audio signal post-processing.

Conclusions. Configuring the VGE software block settings to match the ASIO data input/output protocol prevalent in audio interface technology (44.1 kHz sampling frequency, buffer size of 8) substantially decreases latency in typical audio module circuit nodes under cyber-physical emulation in the VGE LiveSPICE environment. The 5 ms delay of the direct transmission circuit contrasts with the 7 ms latency observed in the cyber-physical emulation of the SPICE circuit when both are benchmarked within the VGE Simulink environment. Successful cyber-physical emulation of SPICE models is achieved with specific settings: a 44.1 kHz sampling frequency, buffer sizes ranging from 512 to 1024 samples, and the ASIO data input/output protocol.
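The dependence of delay on buffer size and sampling rate can be illustrated with a short calculation. This sketch covers only the time needed to fill one buffer; it does not model converter, driver, or processing delays, so it is not a reconstruction of the latencies measured in the study. The function name `buffer_latency_ms` is hypothetical.

```python
def buffer_latency_ms(buffer_size_samples, sample_rate_hz):
    """Time in milliseconds needed to fill one audio buffer."""
    if buffer_size_samples <= 0 or sample_rate_hz <= 0:
        raise ValueError("buffer size and sample rate must be positive")
    return 1000.0 * buffer_size_samples / sample_rate_hz


# Buffer sizes quoted in the conclusions, at a 44.1 kHz sampling frequency.
for size in (512, 1024):
    print(f"{size} samples: {buffer_latency_ms(size, 44_100):.2f} ms")
```

Doubling the buffer doubles this contribution, which is the basic trade-off between low latency and protection against buffer underruns.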

- The effectiveness of an adaptive algorithm for suppressing non-fluctuation interference based on the analysis of the spectrum envelope was demonstrated. This algorithm can be used as a means for isolating the envelope of the interference spectrum to enable the formation of the amplitude-frequency response of the notch filter in real time.

- Processing methods for three types of non-fluctuation interference were implemented and tested: harmonic, frequency-shift keying, and phase-shift keying. A quadrature amplitude modulated signal serves as the useful signal for the purposes of the study.

- The developed adaptive notch filter based on spectrum envelope analysis is highly effective in combating harmonic interference to achieve energy gains of 8–9 dB depending on the relative intensity of interference.

Objectives. The rapid advancement of wireless technologies, including IoT and 5G/6G, is accompanied by an increase in the overall level of electromagnetic interference. This sets engineers the task of developing effective methods to suppress such interference, including especially challenging non-fluctuating interference of various kinds. The study aims to implement and analyze the effectiveness of a non-fluctuation interference rejection method using an adaptive filter based on spectrum envelope analysis.

Methods. Mathematical modeling, spectral analysis, and adaptive filtering methods are used in the work. The described approach is based on spectrum envelope extraction for identification and subsequent suppression of non-fluctuation interference.

Results. The effectiveness of an adaptive algorithm for suppressing non-fluctuation interference based on the analysis of the spectrum envelope has been demonstrated. This algorithm can be used as a means for isolating the envelope of the interference spectrum to enable the formation of the amplitude-frequency response of the notch filter in real time. Processing methods for three types of non-fluctuation interference were implemented and tested: harmonic, frequency-shift keying (FSK), and phase-shift keying (PSK). A quadrature amplitude modulated signal serves as the useful signal for the purposes of the study. The experimental results demonstrate the good efficiency of the proposed method. The developed adaptive notch filter based on spectrum envelope analysis is highly effective in combating harmonic interference to achieve energy gains of 8–9 dB depending on the relative intensity of interference. Notably, even as interference intensifies, the filter effectiveness persists, albeit with a slight reduction. The algorithm functions effectively under exposure to narrowband FSK and PSK interference.
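The general idea of forming a notch response from a spectrum envelope can be sketched as follows. This is not the authors' algorithm: it assumes a crude peak-hold envelope over neighboring frequency bins, a median-based threshold, and a random binary sequence standing in for the useful signal. All names and parameter values are hypothetical.

```python
import cmath
import math
import random


def dft(x):
    """Naive discrete Fourier transform (adequate for short blocks)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def envelope_notch(signal, threshold=4.0):
    """Zero the bins whose spectrum envelope exceeds threshold * median envelope."""
    spectrum = dft(signal)
    mags = [abs(c) for c in spectrum]
    n = len(mags)
    # Peak-hold envelope: maximum over each bin and its two circular neighbors.
    envelope = [max(mags[(k - 1) % n], mags[k], mags[(k + 1) % n]) for k in range(n)]
    baseline = sorted(envelope)[n // 2]  # median as an estimate of the signal level
    response = [0.0 if e > threshold * baseline else 1.0 for e in envelope]
    filtered = idft([c * h for c, h in zip(spectrum, response)])
    return [v.real for v in filtered], response


def mean_square_error(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


# Stand-in useful signal plus a strong harmonic interferer on bin 8 of 64.
rng = random.Random(0)
n_samples = 64
clean = [rng.choice([-1.0, 1.0]) for _ in range(n_samples)]
noisy = [s + 5.0 * math.cos(2 * math.pi * 8 * t / n_samples) for t, s in enumerate(clean)]
filtered, response = envelope_notch(noisy)
print("error before:", mean_square_error(noisy, clean))
print("error after: ", mean_square_error(filtered, clean))
```

The notch also removes the part of the useful signal that shares the rejected bins, which is consistent with the observation that wider-band interference is harder to suppress without damaging the signal.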

Conclusions. The proposed adaptive algorithm for suppressing non-fluctuation interference based on spectrum envelope analysis is most effective in the presence of harmonic interference within the communication channel, but less effective in the presence of more broadband interference. The study is of practical importance for digital communication systems, where high noise immunity is required in a complex electromagnetic environment.

- The proposed “weight–observation time” quality criterion is used to compare different observation planning algorithms that take into account spacecraft priority and total observation time.

- In order to account for the structure of the total observation time, the “weight–observation structure” criterion is introduced.

- It is analytically confirmed that the limiting values of the criteria differ for different scheduling methods.

- The conducted numerical experiment is used to confirm the nature of the change of criteria for different planning methods and parameters included in the criteria.

Objectives. One of the critical tasks of space monitoring is the planning of observations, since the quality and amount of information obtained depend on how well the observation plan is developed. However, the selection of a method for planning spacecraft observations is hampered by a lack of unified criteria for comparing different planning algorithms. Therefore, the work sets out to develop planning quality criteria on the basis of the physical observation principles underlying radar, radiotechnical, and optical monitoring approaches in order to analytically determine their main parameters and check these parameters numerically.

Methods. The proposed quality criteria are deterministic, limited in energy by signal strength and observation time. The limiting values of the quality criteria for fixed observation time are analytically determined. In order to obtain the values of the quality criteria for four scheduling algorithms, a computational experiment is carried out.

Results. The proposed “weight–observation time” quality criterion is used to compare different observation planning algorithms that take into account spacecraft priority and total observation time. In order to account for the structure of the total observation time, the “weight–observation structure” criterion is introduced. It is analytically confirmed that the limiting values of the criteria differ for different scheduling methods. The conducted numerical experiment is used to confirm the nature of the change of criteria for different planning methods and parameters included in the criteria.

Conclusions. The proposed observation planning quality criteria, which are based on the physical observation principles by radiotechnical and optical means, are used to numerically compare the results of spacecraft observation planning to take into account the priority of observation, as well as observation time and structure (how many and how long are the intervals into which the total observation time is divided). The possibility of using the proposed “weight–observation time” and “weight–observation structure” criteria to compare different planning algorithms is confirmed by computational experiment. Therefore, it is reasonable to use the proposed criteria for optimization of scheduling algorithms or their numerical comparison for different satellite observation conditions.

MATHEMATICAL MODELING

- An exact solution for calculating the normal component of the magnetic induction vector of the parallelepiped-shaped permanent magnet was obtained. Based on this, a straightforward and easy-to-use analytical model of the normal component of the magnetic induction vector was developed, which closely approximates the formula derived for the exact solution.

- The developed analytical model of the normal component of the magnetic induction vector can be used for theoretical development of an analytical model of the useful signal of a measuring system with inductive transmission of information about the parameters of a moving structure to a stationary signal receiver.

Objectives. In a measuring system based on the inductive transmission of information from a moving structure to a stationary signal receiver, the signal carrying useful information about the parameters of the moving structure is formed by a magnetic system containing a permanent magnet mounted on the stationary part of the measuring system. The magnetic field of the permanent magnet (MFPM) determines the magnetic flux, and, consequently, the induction current in a conducting coil located on the moving structure. In order to theoretically justify the parameters of the measuring system including the optimization of its components, a simple and easy-to-use analytical model of the useful signal for determining the requirements for the mathematical description of the MFPM is required. The use of known solutions for developing an analytical model of the useful signal of the measuring system is complicated by the need to use inverse trigonometric functions or the results of numerical calculations. The present work sets out to obtain an exact solution to the problem of calculating the MFPM and on this basis to develop a simple, convenient analytical model of the normal component of the magnetic induction vector (NCMIV) of a permanent magnet used to develop an analytical model of the useful signal.
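To illustrate why known solutions involve inverse trigonometric functions, the sketch below evaluates a widely quoted closed-form expression for the flux density on the symmetry axis of a uniformly magnetized rectangular block magnet. It is not the exact solution or the simplified model developed in the article; the function name, parameter names, and example dimensions are assumptions made for illustration.

```python
import math


def block_magnet_axial_field(remanence_t, length_m, width_m, thickness_m, distance_m):
    """On-axis flux density (tesla) at distance_m from the pole face.

    Assumes uniform magnetization along the thickness and a field point on
    the symmetry axis, where the field is normal to the pole face.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")

    def pole_term(z):
        # Contribution of one pole face located a distance z from the field point.
        root = math.sqrt(4 * z * z + length_m ** 2 + width_m ** 2)
        return math.atan(length_m * width_m / (2 * z * root))

    near_face = pole_term(distance_m)
    far_face = pole_term(distance_m + thickness_m)
    return remanence_t / math.pi * (near_face - far_face)


# Hypothetical 20 x 20 x 10 mm magnet with a remanence of 1.3 T.
for z_mm in (1, 5, 10, 20):
    b = block_magnet_axial_field(1.3, 0.020, 0.020, 0.010, z_mm / 1000)
    print(f"z = {z_mm:2d} mm: B = {b:.4f} T")
```

Even in this on-axis special case the result is a difference of arctangents, which makes further analytical work, such as integrating the flux through a moving coil, cumbersome.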

Methods. The equivalent solenoid method was used along with mathematical analysis approaches.

Results. An exact solution for calculating the normal component of the magnetic induction vector of the parallelepiped-shaped permanent magnet was obtained. Based on this, a straightforward and easy-to-use analytical model of the NCMIV was developed, which closely approximates the formula derived for the exact solution.

Conclusions. The developed analytical model of the NCMIV can be used for theoretical development of an analytical model of the useful signal of a measuring system with inductive transmission of information about the parameters of a moving structure to a stationary signal receiver.

Objectives. Triply periodic minimal surfaces are non-intersecting surfaces with zero mean curvature, consisting of elements repeating in three directions of the Cartesian coordinate system. The use of structures based on minimal surfaces in heat engineering equipment is associated with their advantages over the classical lattice and honeycomb structures often used in practice. The aim of the work is to study heat transfer during filtration flow of an incompressible fluid in a porous medium having an ordered macrostructure based on a gyroid triply periodic minimal surface.

Methods. In order to solve the problem of heat transfer in a porous medium, the finite difference method is used. As a means of implementing the finite difference method algorithm, the Heat Transfer Solver software was developed in the Python programming language.
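A minimal sketch of an explicit finite difference scheme of this kind is given below. It is not the Heat Transfer Solver itself: a one-dimensional two-temperature model (separate solid framework and fluid temperatures coupled by an exchange term) is assumed, and all coefficient names and values are hypothetical.

```python
def advance(t_solid, t_fluid, dt, dx, a_solid, a_fluid, velocity, h_solid, h_fluid):
    """One explicit time step for coupled solid and fluid temperature profiles.

    Assumed model: conduction in both phases, upwind advection in the fluid
    (velocity >= 0), and interphase exchange proportional to T_solid - T_fluid.
    """
    n = len(t_solid)
    new_s, new_f = t_solid[:], t_fluid[:]
    for i in range(1, n - 1):
        lap_s = (t_solid[i + 1] - 2 * t_solid[i] + t_solid[i - 1]) / dx ** 2
        lap_f = (t_fluid[i + 1] - 2 * t_fluid[i] + t_fluid[i - 1]) / dx ** 2
        adv_f = velocity * (t_fluid[i] - t_fluid[i - 1]) / dx
        exchange = t_solid[i] - t_fluid[i]
        new_s[i] = t_solid[i] + dt * (a_solid * lap_s - h_solid * exchange)
        new_f[i] = t_fluid[i] + dt * (a_fluid * lap_f - adv_f + h_fluid * exchange)
    # Boundaries: fluid inlet temperature is held fixed; the solid ends and
    # the fluid outlet use a zero-gradient condition.
    new_s[0], new_s[-1] = new_s[1], new_s[-2]
    new_f[-1] = new_f[-2]
    return new_s, new_f


# Hypothetical setup: medium initially at 20 degrees, fluid enters at 100 degrees.
nodes, dx, dt = 21, 0.01, 0.1
solid = [20.0] * nodes
fluid = [100.0] + [20.0] * (nodes - 1)
for _ in range(200):
    solid, fluid = advance(solid, fluid, dt, dx, a_solid=1e-4, a_fluid=1e-4,
                           velocity=0.01, h_solid=0.5, h_fluid=0.5)
print([round(v, 1) for v in fluid[:5]])
print([round(v, 1) for v in solid[:5]])
```

With these values the scheme satisfies the usual explicit stability limits (the diffusion number is 0.1 and the Courant number is 0.1); larger time steps would require an implicit scheme.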

Results. The described software program was used to obtain a numerical solution of the heat transfer problem in a porous medium with an ordered macrostructure using the finite difference method. The program functionality enables the investigation of the heat transfer process dynamics and the influence of various parameters on the temperature distribution. The program was used to study the heat transfer process in a porous medium based on a gyroid triply periodic minimal surface. Graphical dependencies of the solid framework and fluid temperatures, as well as of the heat flux, on the coordinate at different time steps were obtained. Characteristic time intervals with the highest absolute temperature gradient values were identified.

Conclusions. The results of the work, including both the developed software and the obtained temperature dependencies, can be used in a number of engineering problems where it is important to predict the temperature distribution in porous materials under various operating conditions.

- A formation saturated with oil, water, and a steam–gas mixture is theoretically described. A closed system of heat and mass transfer equations is obtained taking into account diffusion-droplet and heat flows and phase transformations.

- A formulated mathematical statement of the model comprises an initial–boundary value problem for equations relating the temperature, saturation, and pressure of the components of the saturating fluid in the formation. Numerical algorithms for solving are developed and their software implementation carried out.

- An application developed for computer implementation of the model provides visualization of the calculation results consisting of several components. Numerical experiments were carried out to study how various factors, such as the properties of the formation skeleton and the saturating liquid phase and heater characteristics, affect the thermophysical processes in the formation.

Objectives. An important and urgent task of the oil producing industry is the identification of patterns of thermophysical processes in reservoirs. One approach to improving the efficiency of oil recovery in conditions of hard-to-recover reserves involves thermal action on the reservoir. The construction of mathematical models for describing such processes to optimize production technologies is based on the formation of nonstationary heat flows in the reservoir when a shut-in well is heated. The application of mathematical modeling methods considered in the work forms a basis for calculating how the distributions of nonstationary fields of thermophysical characteristics in the reservoir during well heating depend on the well parameters and the properties of the media.

Methods. The work is based on heat- and mass-transfer theory along with mathematical physics, analytical and numerical methods, as well as algorithms, computer modeling approaches, and the development of applications using modern programming languages and their libraries.

Results. A formation saturated with oil, water, and a steam–gas mixture is theoretically described. A closed system of heat and mass transfer equations is obtained taking into account diffusion-droplet and heat flows and phase transformations. A formulated mathematical statement of the model comprises an initial–boundary value problem for equations relating the temperature, saturation, and pressure of the components of the saturating fluid in the formation. Numerical algorithms for solving are developed and their software implementation carried out. An application developed for computer implementation of the model provides convenient visualization of the calculation results consisting of several components (modules). Numerical experiments were carried out using the developed software to study how various factors, such as the properties of the formation skeleton and the saturating liquid phase and heater characteristics, affect the thermophysical processes in the formation.

Conclusions. The developed model can be used to clearly describe nonstationary distributions of thermophysical characteristics formed by thermal and diffusion-droplet flows in the reservoir during heating of a shut-in well. The obtained results expand current understanding of the regularities of thermophysical processes and the properties of the saturating phase in the reservoir under thermal influence.

- The project assessment method and implementation methodology were developed, taking into account customer requirements and the capabilities of the potential contractor, to enable rational decision-making regarding its formulation.

- The method may be applied to establish the optimal requirements and conditions for project implementation in a balanced manner. In contrast to expert methods, the presented analytical method and methodology provide a higher objectivity of the generalized assessment, validity, and effectiveness in making and implementing managerial decisions.

- The methodology’s universal criteria and procedures make it suitable for application to various kinds of assessment and in various areas of project activity, including research and development work.

Objectives. The work sets out to develop a method and methodology for evaluating project activities, including research and development work. The development of this method and its associated methodology is relevant due to the need to provide an analytical assessment of a project based on its main performance indicators, such as the uniqueness of the results and the resources required to produce them. This assessment must take into account both the requirements of the customer and the capabilities of the prospective performers to make an informed decision on its formulation. Currently, the best-known methods for evaluating a planned project are based on economic efficiency. However, the approaches taken by customers and contractors are often different and sometimes contradictory. For example, a customer may minimize the risk of failing to achieve the project goal by setting appropriate requirements and resource costs, while a contractor minimizes the same risks by increasing the requested time and material resources, as well as by adjusting the requirement criteria for the project results, which are based on their ability to fulfill them. The concept of fuzzy sets allows various assessment approaches to be combined to provide informed and coordinated decision-making regarding the feasibility and expediency of setting up and executing a project.

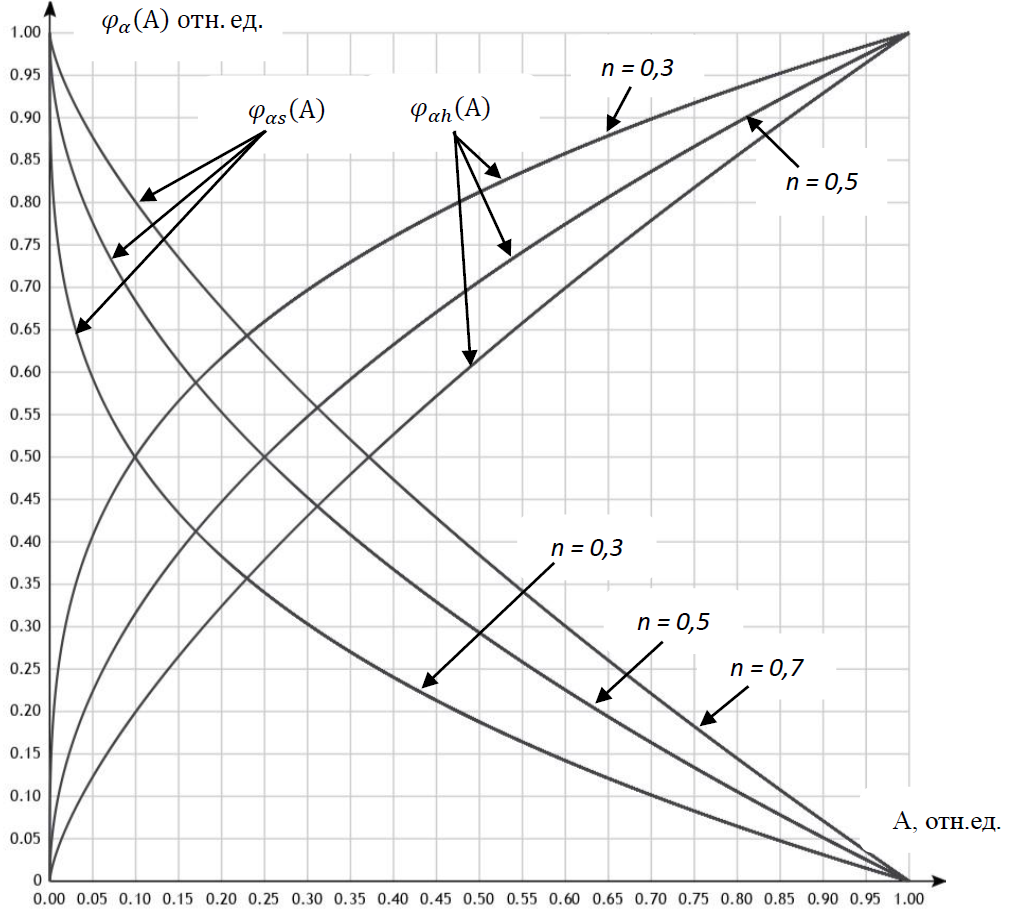

Methods. The developed method and methodology for project evaluation are based on the theory of fuzzy sets and the concept of fuzzy logic, using membership functions to model project parameter estimates.
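How membership functions can reconcile customer and contractor views may be sketched as follows. The abstract does not specify the shape of the membership functions or the aggregation rule, so triangular functions and the minimum (fuzzy AND) operator are assumed here; the parameter being assessed (project duration in months) and all numbers are hypothetical.

```python
def triangular(x, left, peak, right):
    """Triangular membership function; requires left < peak < right."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def customer_acceptance(months):
    # Hypothetical: the customer would ideally like delivery in 8 months.
    return triangular(months, 4, 8, 12)


def contractor_feasibility(months):
    # Hypothetical: the contractor is most comfortable with 12 months.
    return triangular(months, 8, 12, 18)


def agreement(months):
    """Fuzzy AND: a duration is only as good as its weaker assessment."""
    return min(customer_acceptance(months), contractor_feasibility(months))


candidates = range(4, 19)
best = max(candidates, key=agreement)
print(best, agreement(best))
```

The maximum of the combined membership marks the duration at which neither party's assessment can be improved without lowering the other's, which is one way to read a balanced choice of project conditions.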

Results. The project assessment method and implementation methodology were developed, taking into account customer requirements and the capabilities of the potential contractor, to enable rational decision-making regarding its formulation.

Conclusions. The method may be applied to establish the optimal requirements and conditions for project implementation in a balanced manner. In contrast to expert methods, the presented analytical method and methodology provide a higher objectivity of the generalized assessment, validity, and effectiveness in making and implementing managerial decisions. The methodology’s universal criteria and procedures make it suitable for application to various kinds of assessment and in various areas of project activity, including research and development work.

ISSN 2500-316X (Online)